As I work through the Hugging Face smolagents course, I’ve come to appreciate the power of tools in making AI agents more effective. To deepen my understanding, I decided to take a hands-on approach: I built a set of smolagents tools, documented key takeaways, and published them on Hugging Face for others to use and critique. In this article, I’ll share five essential tools and practical tips for building smarter AI agents.

What Are Tools in SmolAgents?

Tools are pieces of (deterministic) code that enable agents to interact with the world and perform specific tasks. In SmolAgents, tools are Python functions that your LLM will be able to access at will. Tools can be anything: simple calculations, complex API interactions, etc.

Anatomy of a Tool

A SmolAgents tool typically includes the following parts. See the code snippet below for an example.

- Name: Identifies the tool.

- Description: Explains its purpose.

- Inputs: Defines expected parameters.

- Output Type: Specifies the returned data format.

- Forward Function: The executable logic.

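from smolagents import Tool

class WeatherTool(Tool):
    name = "get_current_weather"
    description = "Fetches current weather for a given location"
    # Illustrative inputs dictionary (the format is covered in Tip 2)
    inputs = {"location": {"type": "string", "description": "City name, e.g. 'Paris, France'"}}
    output_type = "string"

    def forward(self, location: str) -> str:
        # Fetches weather data from API
        # Returns weather details
        ...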

Tools complement LLMs well

While the LLM provides reasoning and decision-making, tools execute tasks accurately because they are made of deterministic code. In other words, the LLM is the brain and the Tools do the work.

This is a great application of the programming practice of “separation of concerns”. Each tool specializes in a task. This improves predictability (tools have deterministic outputs) and safety (tools limit the actions agents can take, which limits unwanted behaviors).

Finally, using tools also boosts product speed and reduces costs. For example, you can offload complex calculations to a tool, or fetch data through API calls instead of loading it all into the context window.
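To make the safety point concrete, here is a minimal sketch in plain Python (it does not import smolagents) of how a fixed registry of tools bounds what an agent can do. The names TOOLS, call_tool, add and word_count are hypothetical, and this is not how smolagents dispatches tools internally. It only illustrates the idea.

```python
from typing import Callable, Dict


def add(a: float, b: float) -> float:
    """Deterministic arithmetic: same inputs, same output."""
    return a + b


def word_count(text: str) -> int:
    """Counts whitespace-separated words in a string."""
    return len(text.split())


# Hypothetical registry: the agent may only call what is listed here.
TOOLS: Dict[str, Callable] = {"add": add, "word_count": word_count}


def call_tool(name: str, **kwargs):
    """Runs a registered tool, or returns a readable refusal string."""
    if name not in TOOLS:
        available = ", ".join(sorted(TOOLS))
        return f"Unknown tool '{name}'. Available tools: {available}."
    return TOOLS[name](**kwargs)


print(call_tool("add", a=2, b=3))
print(call_tool("delete_files", path="/"))
```

Anything outside the registry is refused with a message the LLM can read, which is the "tools limit the actions agents can take" idea in its simplest form.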

Pre-built tools: don’t re-invent the wheel

SmolAgents ships with a set of pre-built tools. To include them, use your_agent = CodeAgent(..., add_base_tools=True); to leave them out and set your own for better control, pass add_base_tools=False. You can also load tools from the community by using from smolagents import load_tool.

4 tips for faster and more reliable SmolAgents Tools

Through testing various agents and tools, I found the following improvements that make your tools faster and more reliable.

Tip 1: Clear Tool Names and Descriptions

Why does that matter? The name and description of each tool are automatically added to the system prompt of your agent. This means your agent knows which tools are available and what they are for based on these two pieces of information alone.

Clarity is key. Compare the bad and good examples below. It helps to describe precisely the purpose, input, and output of the tool.

Bad example:

name = "get_weather"

description = "Gets the weather for a location."

Good example:

name = "get_current_weather"

description = "Get the current weather conditions for a specific location. Input: City name as a string (e.g., 'Paris, France' or 'New York, USA'). Output: Returns a detailed weather report as a sentence including: current temperature in celsius, humidity percentage, precipitation chance, general conditions. Example input: 'London, UK'. Example output: 'The current weather in London, UK is 18°C with 65% humidity and a 30% chance of rain. Conditions are partly cloudy.'"
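To see why these two fields carry so much weight, here is a rough sketch of how tool names and descriptions could end up in a system prompt. The function render_tool_prompt and the prompt wording are hypothetical; the actual template smolagents uses is different, but the principle is the same: the LLM only sees what you wrote.

```python
from typing import Dict, List


def render_tool_prompt(tools: List[Dict[str, str]]) -> str:
    """Builds the tool section of a system prompt from names and descriptions."""
    lines = ["You can use the following tools:"]
    for tool in tools:
        lines.append(f"- {tool['name']}: {tool['description']}")
    return "\n".join(lines)


tools = [
    {"name": "get_weather", "description": "Gets the weather for a location."},
    {
        "name": "get_current_weather",
        "description": (
            "Get the current weather conditions for a specific location. "
            "Input: city name as a string (e.g., 'Paris, France')."
        ),
    },
]

print(render_tool_prompt(tools))
```

Reading the printed prompt side by side makes it clear how little the first description tells the model compared to the second.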

Tip 2: Detailed Input Formats

Input descriptions are defined in the inputs dictionary of your tool class. Ambiguous input descriptions lead to errors and retries. Being very specific about input formats helps a lot. See below.

Bad example:

inputs = {"date": {"type": "string", "description": "The date."}}

Good example:

inputs = {"date": {"type": "string", "description": "Date in YYYY-MM-DD format (e.g., '2024-03-15')."}}
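A precise input description pairs well with a check inside the tool, so that a badly formatted value produces a message the LLM can act on. The sketch below is one way to do this with the standard library; parse_iso_date is a hypothetical helper, not part of smolagents.

```python
from datetime import date, datetime


def parse_iso_date(value: str) -> date:
    """Parses a 'YYYY-MM-DD' string, raising ValueError with a clear message."""
    try:
        return datetime.strptime(value, "%Y-%m-%d").date()
    except ValueError:
        raise ValueError(
            f"Invalid date '{value}'. Expected YYYY-MM-DD format (e.g., '2024-03-15')."
        ) from None


print(parse_iso_date("2024-03-15"))
```

The error message repeats the same format hint as the input description, so the LLM gets a consistent instruction whether it reads the tool definition or the failure.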

Tip 3: Tools should return llm-friendly information

The output of the forward function (mandatory in every tool) will be read by the LLM. This output is the basis on which the LLM decides whether or not it has enough information to send a final answer to the user.

With that in mind, it’s easy to understand that adding context in the answer will reduce the error rate. See below for a concrete example.

# bad example:
def forward(self) -> str:
    return "28.5,0.6,1013"

# good example:
def forward(self) -> str:
    return "The temperature is 28.5°C with 60% humidity and 1013 hPa pressure."
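In practice the raw values usually come from an API or a sensor, so the sentence has to be built from them. Here is a small sketch of that conversion; describe_reading is a hypothetical helper, and the assumption that humidity arrives as a fraction (0.6) comes from the bad example above.

```python
def describe_reading(temp_c: float, humidity: float, pressure_hpa: int) -> str:
    """Turns raw sensor values into a sentence with units the LLM can read."""
    return (
        f"The temperature is {temp_c}°C with {humidity:.0%} humidity "
        f"and {pressure_hpa} hPa pressure."
    )


raw = "28.5,0.6,1013"
temp, humidity, pressure = raw.split(",")
print(describe_reading(float(temp), float(humidity), int(pressure)))
```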

Tip 4: Error logging should also be verbose

Your tools may fail for many possible reasons. The first “person” to read the error will be the LLM, which will try to solve the problem on its own. Clear error messages help the LLM troubleshoot issues and adjust.

So when developing tools, think through the ways your tool could fail, and return verbose errors. Use Python’s try/except blocks.

def forward(self, location: str) -> str:
    try:
        data = get_weather_data(location)
        return f"Weather in {location}: {data['temp']}°C, {data['conditions']}"
    except LocationNotFoundError:
        return "Invalid location. Please provide a valid city name."
    except APIError as e:
        return f"Weather service error: {str(e)}. Try again later."

The SmolAgents tools are available for free on HuggingFace

Tool names and IDs

- Date and Time Tool (id: get_current_date_and_time) - Get current date, day of the week, time, for the user’s timezone.

- User Input Tool (id: user_input) - Ask the user a clarifying question.

- User Location Tool (id: get_user_location) - Get the user’s location from their IP.

- Final Answer Tool (id: final_answer) - Send the final answer to the user.

- Web Search Tool (DuckDuckGoSearchTool from Hugging Face) - Get the top ten results for a query.

You can download them for free on my HuggingFace hub. You can also load them onto your agent using the load_tool() function. See the code below.

from smolagents import load_tool, CodeAgent

downloaded_tool = load_tool(
    "prige/tool_name",  # "{user_id}/{tool_id}"
    trust_remote_code=True
)

1/ User Location Tool

The User Location Tool retrieves a user's approximate location based on their IP address. This capability is crucial for AI agents that need to provide location-aware responses, such as local business recommendations, weather updates, or simply the time of day.

I coded this tool following the class-based implementation (not the decorator-based approach), so that the tool could be published on HF’s website. The class-based implementation also allows for simpler definition of name, description, inputs and outputs, and the creation of methods inside the class that help create better separation of concerns when building complex tools.

Example: imagine an AI chatbot that helps users find nearby restaurants. When a user asks: "What restaurants are open near me?" The chatbot can call the User Location Tool to retrieve the user's city and region. This location data can then be used to query an external API (e.g., Google Places API or Yelp) to fetch restaurant recommendations. By embedding geolocation capabilities into AI agents, this tool enhances contextual awareness, making conversations more relevant and actionable.

The code below is available in its entirety on the Hugging Face Hub here.

from typing import Any
from smolagents import Tool

class UserLocationTool(Tool):
    name = "get_user_location"
    description = "This tool returns the user's location based on their IP address. That is all it does."
    inputs = {}
    output_type = "string"

    def __init__(self):
        # Initialize the logger
        ...

    def _validate_location_data(self, response: Any) -> tuple[bool, str]:
        # Validates the API response
        ...

    async def _call_api(self) -> tuple[bool, Any]:
        # Makes the API call to ipinfo.io.
        ...

    async def forward(self) -> str:
        # Send the request to the API
        success, response = await self._call_api()
        if not success:
            self.logger.error(response)
            return f"Error getting user location: {response}"

        # Validate response format
        is_valid, result = self._validate_location_data(response)
        if not is_valid:
            self.logger.error(f"Data validation failed: {result}")
            return f"Error getting user location: {result}"

        self.logger.info(f"Successfully retrieved location: {result}")
        return result
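The body of the validation helper is elided above. As an illustration only, here is one way such a check could look as a standalone function. The field names city, region and country are my assumption about the API response, and the wording of the returned sentence is hypothetical; the published tool may do this differently.

```python
from typing import Any, Tuple

REQUIRED_FIELDS = ("city", "region", "country")  # assumed field names


def validate_location_data(response: Any) -> Tuple[bool, str]:
    """Returns (True, location sentence) or (False, reason the data is unusable)."""
    if not isinstance(response, dict):
        return False, f"Expected a JSON object, got {type(response).__name__}."
    missing = [field for field in REQUIRED_FIELDS if not response.get(field)]
    if missing:
        return False, f"Missing fields in API response: {', '.join(missing)}."
    return True, (
        f"User is located in {response['city']}, "
        f"{response['region']}, {response['country']}."
    )


print(validate_location_data({"city": "Paris", "region": "Île-de-France", "country": "FR"}))
print(validate_location_data({"city": "Paris"}))
```

Returning a (success, message) pair keeps forward() short: it only has to decide whether to log an error or pass the sentence back to the LLM.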

2/ Date and Time Tool

The Date and Time Tool provides the current time, date, and day of the week for a specified timezone. This functionality is essential for AI agents that need to deliver time-sensitive information or adjust their response based on a user’s local time.

This tool includes a built-in validation system to detect incorrect timezones and suggest valid alternatives.

How the Code Works: The tool expects a timezone string (e.g., 'UTC', 'US/Pacific', 'Europe/London'). The _validate_timezone() function checks whether the provided timezone is valid using pytz. If an invalid timezone is entered, the tool suggests possible correct alternatives back to the LLM. The format_answer() function structures the output in a human-readable format: "In [timezone], it is [day of the week], [YYYY-MM-DD] at [HH:MM:SS]." The forward() method returns the formatted string to the LLM.

The code below is available in its entirety on the Hugging Face Hub here.

from typing import Any
from smolagents import Tool

class GetCurrentDateAndTime(Tool):
    name = "get_current_date_and_time"
    description = "This is a tool that gets the current date, day of the week, and time for the specified timezone. Ask user for the timezone or location if not provided."
    inputs = {
        "timezone": {"type": "string", "description": "Timezone (e.g., 'UTC', 'US/Pacific', 'Europe/London')", "required": True}
    }
    output_type = "string"

    def _validate_timezone(self, timezone: str) -> Any:
        # Validate and return a timezone object
        ...

    def format_answer(self, timezone, current_datetime) -> str:
        # Format the answer
        ...

    def forward(self, timezone: str) -> str:  # Forward function
        from datetime import datetime
        try:
            tz = self._validate_timezone(timezone)
            current_datetime = datetime.now(tz)
            return self.format_answer(timezone, current_datetime)
        except ValueError as ve:  # Re-raise ValueError
            raise ValueError(str(ve))
        except Exception as e:  # Handle any unexpected errors
            # Raise (not just construct) the error so it reaches the LLM
            raise ValueError(f"Unexpected error getting date and time: {str(e)}")
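The two helper bodies are elided above, so here is a rough sketch of the "suggest valid alternatives" idea and of the output format. To keep it self-contained, it swaps pytz for a short hardcoded list and uses difflib from the standard library for the suggestions. KNOWN_TIMEZONES and the exact error wording are assumptions for illustration, not the published implementation.

```python
from datetime import datetime
from difflib import get_close_matches

# Small illustrative list; the published tool checks timezones with pytz instead.
KNOWN_TIMEZONES = ["UTC", "US/Pacific", "US/Eastern", "Europe/London", "Europe/Paris"]


def validate_timezone(timezone: str) -> str:
    """Returns the timezone name if known, else raises with suggestions."""
    if timezone in KNOWN_TIMEZONES:
        return timezone
    suggestions = get_close_matches(timezone, KNOWN_TIMEZONES, n=3, cutoff=0.6)
    hint = f" Did you mean: {', '.join(suggestions)}?" if suggestions else ""
    raise ValueError(f"Invalid timezone '{timezone}'.{hint}")


def format_answer(timezone: str, current_datetime: datetime) -> str:
    """Formats the answer in the sentence shape described in the article."""
    return (
        f"In {timezone}, it is {current_datetime:%A}, "
        f"{current_datetime:%Y-%m-%d} at {current_datetime:%H:%M:%S}."
    )


print(format_answer("UTC", datetime(2024, 3, 15, 9, 30, 0)))
```

A misspelled value such as 'Europe/Londn' raises a ValueError whose message names the closest known timezone, which gives the LLM something concrete to retry with.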

3/ Web Search Tool

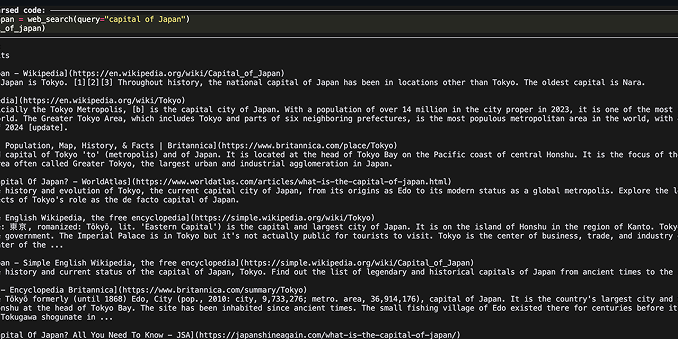

The Web Search Tool allows AI agents to retrieve real-time information from the web. It is built using Hugging Face's prebuilt DuckDuckGo search tool. With it, AI agents can answer broad user queries dynamically instead of relying solely on pre-trained knowledge. This is particularly useful for topics that require up-to-date information.

How the Code Works: You simply need to import the tool and give your agent access to it (see code below). The LLM will use it at will, and the returned results will look like the image above.

Examples: Help users find updated product prices or reviews, e.g., "Best smartphone under $500?"

from smolagents import CodeAgent, DuckDuckGoSearchTool

search_tool = DuckDuckGoSearchTool()
# `model` is the LLM you have already configured for your agent
agent = CodeAgent(tools=[search_tool], model=model, add_base_tools=False, verbosity_level=2)

4/ User Input Tool

This tool allows an AI agent to request additional information from the user. This is useful when the LLM does not have enough context to provide a correct response.

How the Code Works

- The _validate_question() function ensures that the question is a valid string and is not empty. If validation fails, an error is logged.

- The forward() function asks the user the validated question via a simple input() (change this to send to your front end) and stores the response.

- The user’s answer is checked (it could be empty), logged, and then returned to the LLM for further processing.

Example: A user opens their AI-powered financial app and types: "Can you help me set up a budget for my expenses?" The LLM recognizes that it needs more details to generate a useful response, so it calls the User Input Tool to ask: "What is your monthly income?".

The code below is available in its entirety on the Hugging Face Hub here.

from smolagents import Tool

class UserInputTool(Tool):
    name = "user_input"
    description = "Asks for user's input on a specific question"
    inputs = {"question": {"type": "string", "description": "The question to ask the user"}}
    output_type = "string"

    def __init__(self):
        super().__init__()
        import logging
        self.logger = logging.getLogger(__name__)
        self.logger.setLevel(logging.INFO)
        self.user_input = ""

    async def _validate_question(self, question) -> tuple[bool, str]:
        # Helper method to validate the question
        ...

    async def forward(self, question: str) -> str:
        # Validate the question first
        success, response = await self._validate_question(question)
        if not success:
            self.logger.error(response)
            return f"Error: {response}"

        # Ask the validated question and ensure non-empty response
        self.logger.info(f"Asking user: {question}")
        while True:
            self.user_input = input(f"{question} => Type your answer here:").strip()
            if self.user_input:
                break
            print("Please provide a non-empty answer.")

        self.logger.info(f"Received user input: {self.user_input}")
        return self.user_input
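The validation helper is elided above, and input() is meant to be replaced by your front end. The sketch below shows one possible shape for both, written as plain synchronous functions. validate_question and ask_user are hypothetical names, and the read parameter is my own addition to show how the input source could be swapped.

```python
from typing import Any, Callable, Tuple


def validate_question(question: Any) -> Tuple[bool, str]:
    """Returns (True, cleaned question) or (False, error message)."""
    if not isinstance(question, str):
        return False, f"Question must be a string, got {type(question).__name__}."
    cleaned = question.strip()
    if not cleaned:
        return False, "Question is empty. Provide a non-empty question."
    return True, cleaned


def ask_user(question: str, read: Callable[[str], str] = input) -> str:
    """Asks until the answer is non-empty; `read` can be swapped for a front end."""
    while True:
        answer = read(f"{question} => Type your answer here:").strip()
        if answer:
            return answer
        print("Please provide a non-empty answer.")


print(validate_question("  What is your monthly income?  "))
```

Passing the reader as a parameter also makes the loop easy to test, since a test can feed canned answers instead of waiting on a real user.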

5/ Final Answer Tool

The Final Answer Tool is responsible for delivering the AI’s response to the user. It does not format or modify the answer; its sole purpose is to pass along a response that has already been formatted. The code below is available in its entirety on the Hugging Face Hub here.

How the Code Works:

- Takes a formatted answer as input (answer: str).

- Returns the answer as-is, without changes.

- Tells the LLM, through its description, to format the answer beforehand, which encourages clean and structured responses.

from smolagents import Tool

class FinalAnswerTool(Tool):
    name = "final_answer"
    description = "This tool sends the final answer to the user. This tool does not format the answer. Format the answer before using this tool."
    inputs = {'answer': {'type': 'any', 'description': "A well formatted and short final answer to the user's problem."}}
    output_type = "any"

    def forward(self, answer: str) -> str:
        # Return the answer unchanged
        return answer

Conclusion: SmolAgents Tools make AI Agents smarter

SmolAgents tools extend LLM capabilities by handling tasks like data retrieval, user input, and execution. Instead of relying solely on the LLM, we offload complex logic to deterministic tools, making AI agents faster, more reliable, and cost-efficient.

For tools to work effectively, they need:

- LLM-friendly error logging: Clear, actionable errors help the model retry intelligently.

- Context-rich tool outputs: Tools should return well-structured, human-readable responses.

- Detailed tool descriptions: Since descriptions guide the LLM, clarity improves tool usage.

In my next article, I’ll explore Final Answer Tools in much more depth. I want to build a suite of tools that format data into UI-ready responses, including tables, structured lists, and graph-friendly outputs. This will bridge AI reasoning with the frontend, ensuring SmolAgents deliver not just correct, but elegant answers.